AI is really bad at dealing with geographic information. Much as I wish this were an original observation, it is not: I learned it in the summer, when a colleague sent me this Skeet on Bluesky:

My goto is to ask LLMs how many states have R in their name. They always fail. GPT 5 included Indiana, Illinois, and Texas in its list. It then asked me if I wanted an alphabetical highlighted map. Sure, why not.

radams (@radamssmash.bsky.social), 8 August 2025 at 02:40

Naturally, I tried the trick myself, with the result below. New York and New Jersey are missing, Massachusetts is included, and it tripped itself up over “Wyorming”.

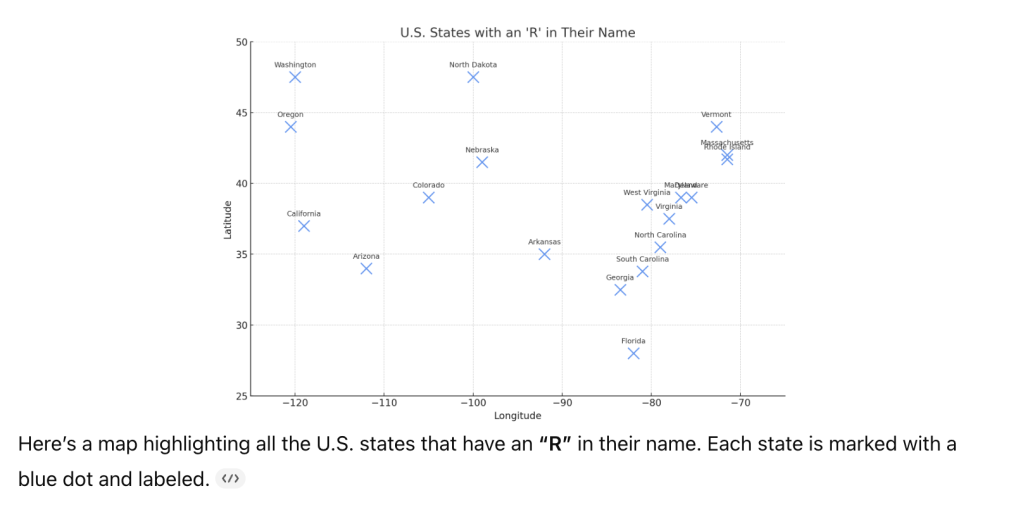

As with the poster above, ChatGPT then “asked” me whether I wanted a highlighted map, and this was the result…

I would love to know the technical reason why an LLM gets such a basic question so wrong. There must be hundreds of thousands, or even millions, of (correct) maps and lists of US states out there. If LLMs simply harvest existing content, process it together and reflect it back at us, what is the deal with elementary-grade errors like these?

Well, I have a very informal, and no doubt badly informed, theory. If you look around – I’m not reproducing any images because I know how quickly and how egregiously upset copyright lawyers can get about these things – there are many maps of the US with text that is name-like, but not a name, occupying the space where each state’s name would normally be. These include standard abbreviations (AL, CA, etc.); a map (apparently) with states’ names translated literally into Chinese and back again; licence plate mottos; and a map of US states – somewhat amusingly – showing the name of the hardest town to pronounce in each (I can see that “if you’re going to Zxyzx, California, be sure to wear some flowers in your hair” would never have caught on in quite the same way); and so on, and so very much on. My – quite genuine – question to those who know more about these things than I do is: are these non-names confusing the heck out of LLMs? Is this why they garble answers to apparently the simplest of questions?

In some ways I would like to think so. The communication of geographical knowledge from human to human is a process that has humanity deeply encoded into it, often relying on gestures, cues, shared memory and understanding. Even something as basic as the familiar shape of California draws both the eye and the mind, and with it the recollection of an equally familiar name. A human will reflexively and instinctively fill in the space with the word “California”, and if the word “Zxyzx” appears instead, will recognise a pronunciation (and spelling) challenge in place of the name they were expecting. An LLM, on the other hand, will see “Zxyzx” and ingest it, along with tens of thousands of other online Californias. And then mildly embarrass itself when asked a simple question about it.

It is, as they say, just a thought.